Azure Synapse Analytics

Azure Synapse Analytics is a limitless analytics service that brings together data integration, enterprise data warehousing, and big data analytics. Note that Skyvia supports only Dedicated SQL Pool.

Azure Synapse Analytics-Specific Features and Limitations

Skyvia has the following limitations for Azure Synapse Analytics:

- Synchronization is not supported for Azure Synapse Analytics.

- Skyvia supports only Dedicated SQL Pool. It does not support Serverless SQL Pool.

Azure Synapse Analytics Connections

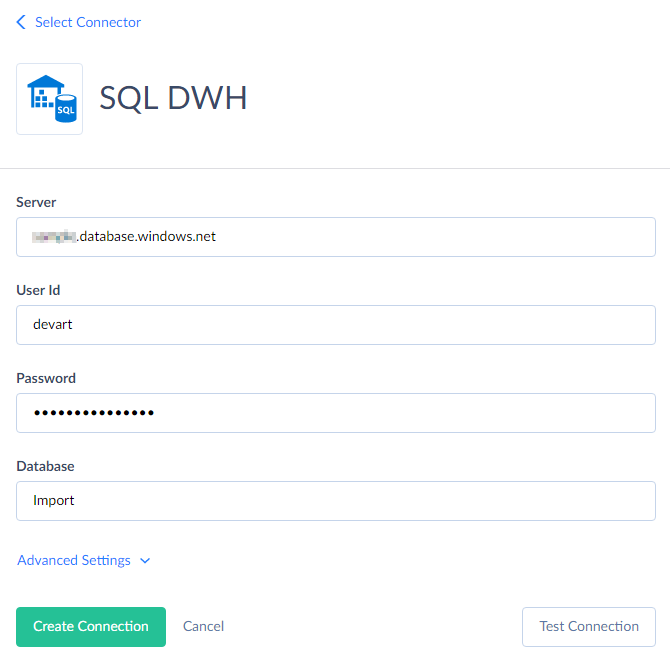

To connect to Azure Synapse Analytics, you need to specify the database server host name or IP address, user name and password to log in with, and database name. If you need to import data to Azure Synapse Analytics or perform replication, you will also need to specify some advanced parameters described below.

You need to specify the following parameters for Azure Synapse Analytics connection:

- Server — name or IP address of the Azure Synapse Analytics server to connect to. Leaving this field empty means using localhost.

- User Id — user name to log in with.

- Password — password to log in with.

- Database — name of the database you want to connect to.

If you want to execute Import integrations, inserting data to Azure Synapse Analytics, or Replication integrations via this connection, you need to click Advanced Settings and set parameters for connecting to Azure Blob storage service. This is necessary, because for these operations Skyvia uses PolyBase to ensure fastest data loading to Azure Synapse Analytics. It loads data to Azure Blob Storage as CSV files and uses PolyBase to import data from these files to Azure Synapse Analytics, and then deletes the CSV files after the import. Thus, you need to specify the Storage Account and Storage Account Key to use. You may also optionally change the protocol to use from default https to http, but this is not recommended. Here are the descriptions of these parameters:

- Storage Account — Azure storage account name.

- Storage Account Key — your 512-bit storage access key.

- Storage Endpoints Protocol — this parameter determines the protocol to use (HTTPS or HTTP).

You can also optionally set the following parameters in Advanced Settings:

-

Command Timeout — this parameter specifies the wait time before terminating an attempt to execute a command and generating an error. Note that it is the time to wait for any server reply since the command was sent to a server, and it doesn't include the time necessary to fetch data if the command selects some data.

-

Connection Timeout — this parameter determines the time (in seconds) to wait while trying to establish a connection before terminating the attempt and reporting an error. Generally you don't need to modify it, but in some specific cases when a connection to the database server is not good you may try increasing its value.

-

Use Bulk Import — this parameter affects import integrations with the INSERT, UPDATE and DELETE operations and with Azure Synapse Analytics as a target.

By default, such integrations import data using PolyBase to quickly load large volumes of data to Azure Synapse Analytics. Skyvia writes data into multiple temporary CSV files, upload them to Azure Blob Storage and then tells SQL Data Warehouse to import data from these CSV files. These actions are performed simultaneously, providing the best performance. After the CSV files are imported, they are deleted. However, when data are imported this way, it's not possible to obtain a per-record error log.

If you disable bulk import, Skyvia will use usual INSERT, UPDATE, and DELETE statements for importing data. This allows you to obtain a per-record error log, but provides far less performance. Thus, disabling bulk import is not recommended and should only be considered if you need to import not that many records and need to have a per-record error log or you do not want to use Azure Blob Storage for temporary files.

Supported Actions and Actions Specifics

Azure Synapse Analytics connector supports the following actions:

-

Execute Command in Source, Lookup, and Target Data Flow components and in Import and Export tasks in the Advanced mode.

-

Execute Query in Source Data Flow components and in Import and Export tasks in the Advanced mode.

-

Lookup in Lookup Data Flow components.

-

Insert in Target Data Flow components.

-

Update in Target Data Flow components.

-

Delete in Target Data Flow components.